India, 13 December 2018 – MathWorks today introduced Sensor Fusion and Tracking Toolbox, which is now available as part of Release 2018b. The new toolbox equips engineers working on autonomous systems in aerospace and defense, automotive, consumer electronics, and other industries with algorithms and tools to maintain position, orientation, and situational awareness. The toolbox extends MATLAB-based workflows to help engineers develop accurate perception algorithms for autonomous systems.

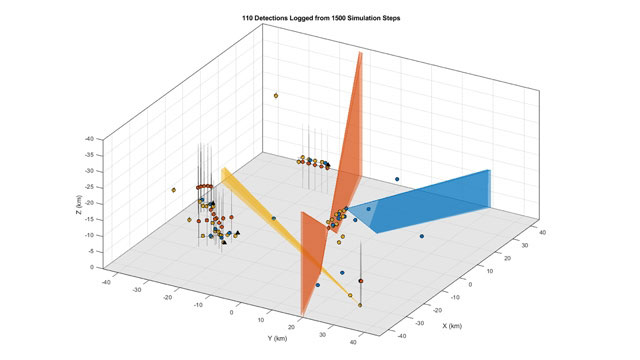

Engineers working on the perception stage of autonomous system development need to fuse inputs from various sensors to estimate the position of objects around these systems. Now, researchers, developers, and enthusiasts can use algorithms for localization and tracking, along with reference examples within the toolbox, as a starting point to implement components of airborne, ground-based, shipborne, and underwater surveillance, navigation, and autonomous systems. The toolbox provides a flexible and reusable environment that can be shared across developers. It provides capabilities to simulate sensor detections, perform localization, test sensor fusion architectures, and evaluate tracking results.

“Algorithm designers working on tracking and navigation systems often use in-house tools that may be difficult to maintain and reuse,” said Paul Barnard, Marketing Director – Design Automation, MathWorks. “With Sensor Fusion and Tracking Toolbox, engineers can explore multiple designs and perform ‘what-if analysis’ without writing custom libraries. They can also simulate fusion architectures in software that can be shared across teams and organizations.”

Sensor Fusion and Tracking Toolbox includes:

· Algorithms and tools to design, simulate, and analyze systems that fuse data from multiple sensors to maintain position, orientation, and situational awareness

· Reference examples that provide a starting point for airborne, ground-based, shipborne, and underwater surveillance, navigation, and autonomous systems

· Multi-object trackers, sensor fusion filters, motion and sensor models, and data association algorithms that can be used to evaluate fusion architectures using real and synthetic data

· Scenario and trajectory generation tools

· Synthetic data generation for active and passive sensors, including RF, acoustic, EO/IR, and GPS/IMU sensors

· System accuracy and performance standard benchmarks, metrics, and

· Deployment options for simulation acceleration or desktop prototyping using C-code generation

To learn more about Sensor Fusion and Tracking Toolbox, visit: mathworks.com/products/sensor-